My name is Daniele Clark. I’m from Florida, but I travel most of the year playing in two bands, so I feel like I’m “from” the road. Today I’m going to share a little about synthesis, one of the fundamentals of sound. I think the more you learn about it, the more you understand sound and music.

Loudon Stearns described it this way: “Once I started learning about synthesis, I started getting a language for timbre and a language for sound, and I started hearing things a little differently. Because we don’t really have a natural language for how to describe how a note evolves or how it starts and ends. How the timbre sounds different between an oboe and a violin. It’s hard. We don’t have words for it.”

When he said that, I thought: that’s how I feel about much of what I’ve learned about the technical side of recording and mixing.

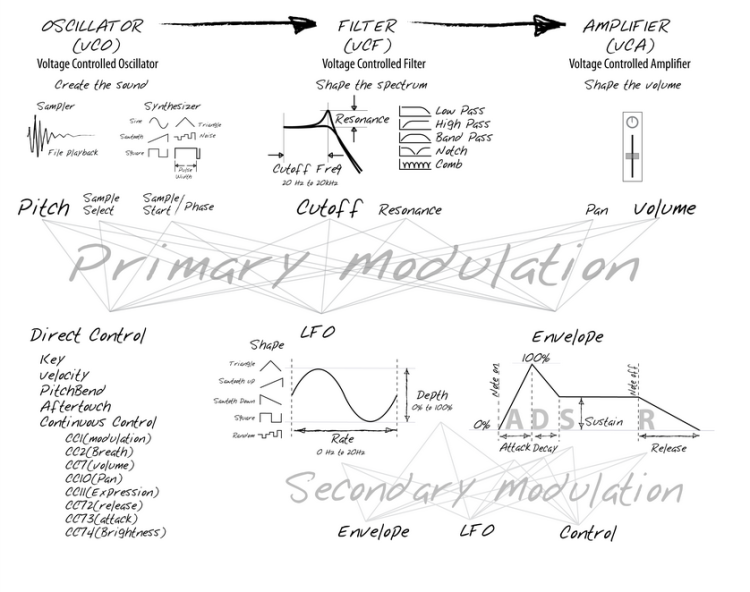

Today I will be talking about the five modules that form the fundamentals of synthesis: the oscillator, filter, amplifier, LFO, and envelope. Here’s an illustration I’ve borrowed that will hopefully help you follow along.

- Oscillator

  - The VCO, or Voltage Controlled Oscillator, is what creates the sound; we could call it the sound creator. It generates the sound from a square wave, a sawtooth wave, or some other geometric waveform. It usually comes across as a buzzy, bright, somewhat aggressive sound.

- Filter

  - The VCF, or Voltage Controlled Filter, is most commonly a 24 dB-per-octave low-pass filter, though it’s usually just called the filter. The low-pass filter is the most important type in synthesis; other types include high-pass and band-pass filters.
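To make the oscillator a little more concrete, here is a minimal Python sketch of the two waveforms mentioned above. It assumes a 44.1 kHz sample rate and uses hypothetical function names (`sawtooth`, `square`); these are “naive” textbook waveforms, not how any particular synth generates them. Both jump abruptly once per cycle, and those sharp edges are what fill the sound with high harmonics (a sawtooth contains every harmonic, a square wave only the odd ones), which is why a raw oscillator sounds bright and buzzy.

```python
SAMPLE_RATE = 44_100  # assumed sample rate (samples per second)


def sawtooth(freq: float, n_samples: int, sr: int = SAMPLE_RATE) -> list[float]:
    """Naive sawtooth: ramps from -1 up toward +1 once per cycle, then jumps back."""
    out = []
    for n in range(n_samples):
        phase = (freq * n / sr) % 1.0  # position within the current cycle, 0..1
        out.append(2.0 * phase - 1.0)
    return out


def square(freq: float, n_samples: int, sr: int = SAMPLE_RATE) -> list[float]:
    """Naive square: +1 for the first half of each cycle, -1 for the second half."""
    out = []
    for n in range(n_samples):
        phase = (freq * n / sr) % 1.0
        out.append(1.0 if phase < 0.5 else -1.0)
    return out


# One second each of a 110 Hz sawtooth and square wave.
saw = sawtooth(110.0, SAMPLE_RATE)
sq = square(110.0, SAMPLE_RATE)
```

Real synthesizers usually generate band-limited versions of these shapes, because naive ones alias (produce unwanted tones) at high pitches.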

To put it in more visual terms, it works something like this: the oscillator creates the sound, much like your voice does, and the filter then shapes that sound by letting some frequencies through and cutting others. A low-pass filter removes the excess high end, which makes the raw oscillator sound more like a real, live instrument.
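Here is a minimal sketch of that oscillator-into-filter chain, assuming a naive sawtooth and a 44.1 kHz sample rate. The helper names are hypothetical, and the filter is a simple digital stand-in rather than any real synth’s circuit: four one-pole low-pass stages in series, each rolling off about 6 dB per octave, for roughly a 24 dB-per-octave slope. It leaves out the resonance control most synth filters have. The `roughness` helper is only a crude proxy for high-frequency content (how far the signal jumps between neighbouring samples).

```python
import math

SAMPLE_RATE = 44_100  # assumed sample rate (samples per second)


def one_pole_lowpass(signal, cutoff_hz, sr=SAMPLE_RATE):
    """First-order low-pass: each output moves part of the way toward the input.
    Above the cutoff it rolls off roughly 6 dB per octave."""
    a = 1.0 - math.exp(-2.0 * math.pi * cutoff_hz / sr)  # smoothing amount, 0..1
    y = 0.0
    out = []
    for x in signal:
        y += a * (x - y)
        out.append(y)
    return out


def four_pole_lowpass(signal, cutoff_hz, sr=SAMPLE_RATE):
    """Four one-pole stages in series: roughly 24 dB per octave, no resonance."""
    for _ in range(4):
        signal = one_pole_lowpass(signal, cutoff_hz, sr)
    return signal


def roughness(signal):
    """Crude brightness proxy: average jump between neighbouring samples."""
    return sum(abs(b - a) for a, b in zip(signal, signal[1:])) / (len(signal) - 1)


# One second of a naive 110 Hz sawtooth, then the same wave through the filter.
raw = [2.0 * ((110.0 * n / SAMPLE_RATE) % 1.0) - 1.0 for n in range(SAMPLE_RATE)]
dark = four_pole_lowpass(raw, cutoff_hz=800.0)
print(f"raw: {roughness(raw):.4f}  filtered: {roughness(dark):.4f}")
```

The filtered wave should report a lower roughness, which is the numeric side of “removing excess high end.”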

- Amplifier

  - The VCA, or Voltage Controlled Amplifier, controls the volume of the sound and how it develops over time. While the filter shapes the tone, the amplifier matters because of the time factor: synthesized sounds move.

So the filter is similar to an EQ; the difference is that a synthesizer filter is meant to move over time.
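As a rough illustration of a filter that moves, the sketch below recomputes a one-pole low-pass coefficient on every sample while the cutoff glides from 5 kHz down to 200 Hz, so the same sawtooth starts bright and ends dark. The sweep range, the one-pole design, and the name `swept_lowpass` are illustrative assumptions; an EQ in a mix, by contrast, usually keeps its settings fixed.

```python
import math

SAMPLE_RATE = 44_100  # assumed sample rate (samples per second)


def swept_lowpass(signal, cutoffs, sr=SAMPLE_RATE):
    """One-pole low-pass whose cutoff is recomputed on every sample.
    `cutoffs` holds one cutoff frequency (Hz) per input sample."""
    y = 0.0
    out = []
    for x, fc in zip(signal, cutoffs):
        a = 1.0 - math.exp(-2.0 * math.pi * fc / sr)  # coefficient follows the moving cutoff
        y += a * (x - y)
        out.append(y)
    return out


n = SAMPLE_RATE  # one second of audio
saw = [2.0 * ((110.0 * i / SAMPLE_RATE) % 1.0) - 1.0 for i in range(n)]  # naive sawtooth
# Cutoff glides exponentially from 5000 Hz to 200 Hz across the second.
sweep = [5000.0 * (200.0 / 5000.0) ** (i / (n - 1)) for i in range(n)]
wah = swept_lowpass(saw, sweep)
```

An exponential glide is used because pitch and cutoff are heard roughly logarithmically, so it sounds like an even sweep; a linear glide would also work, it would just spend most of its time in the upper range.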

The amplifier is sort of like a gain knob or a volume fader, except that the synthesizer amplifier is designed to move quickly over time.
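In code, an amplifier boils down to multiplying every sample by a gain value, and “moving quickly over time” just means that gain changes from one sample to the next. The sketch below is a hypothetical illustration (the `vca` name, the 220 Hz sine tone, and the 5 ms fade-in are all assumptions) that brings a tone in far faster than anyone could push a fader.

```python
import math

SAMPLE_RATE = 44_100  # assumed sample rate (samples per second)


def vca(signal, gain):
    """Multiply each sample by a control value (0 = silent, 1 = full level)."""
    return [s * g for s, g in zip(signal, gain)]


n = SAMPLE_RATE // 2  # half a second of audio
tone = [math.sin(2.0 * math.pi * 220.0 * i / SAMPLE_RATE) for i in range(n)]

# Gain rises from silence to full level in about 5 ms, then stays at 1.0.
ramp = int(0.005 * SAMPLE_RATE)
gain = [min(1.0, i / ramp) for i in range(n)]
out = vca(tone, gain)
```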

In synthesizer language, this development, or change over time, is called modulation.

There are three main kinds of modulators.

- The first is you, as you manually move knobs and manipulate the sound over time.

- Then there are two really important types of algorithmic modulators: ways you can give the synthesizer instructions so that it controls other parameters within the patch on its own.

  - LFO: the LFO, or Low Frequency Oscillator, creates cyclic variations in any other parameter. The LFO often controls the pitch of the oscillator.

  - Envelope: the envelope creates a shape that runs every time a key is pressed. The envelope almost always controls the main amplifier, which is how we make the amplitude change over time and give a note a percussive or a sustaining shape.
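The two algorithmic modulators can be sketched together in a few lines of Python. This is a minimal illustration under stated assumptions, not how any specific synth works: a 44.1 kHz sample rate, a sine-shaped LFO, and a simple linear ADSR (attack, decay, sustain, release) envelope, which is a common envelope design but not one named in the text above. The function names and every parameter value are hypothetical. The LFO nudges the oscillator’s pitch up and down (vibrato), while the envelope drives the amplifier so the note rises, settles to a sustain level, and fades after the key is released.

```python
import math

SAMPLE_RATE = 44_100  # assumed sample rate (samples per second)


def lfo(rate_hz, depth, n_samples, sr=SAMPLE_RATE):
    """Sine LFO: a slow, repeating control signal swinging between -depth and +depth."""
    return [depth * math.sin(2.0 * math.pi * rate_hz * i / sr) for i in range(n_samples)]


def adsr(attack, decay, sustain, release, held, sr=SAMPLE_RATE):
    """Linear ADSR envelope. Times are in seconds; sustain is a level from 0 to 1.
    `held` is how long the key stays down; the release starts when it is let go."""
    a, d, r, h = (int(t * sr) for t in (attack, decay, release, held))
    env = []
    for i in range(h):
        if i < a:
            env.append(i / a)                                 # attack: rise 0 -> 1
        elif i < a + d:
            env.append(1.0 - (1.0 - sustain) * (i - a) / d)  # decay: fall 1 -> sustain
        else:
            env.append(sustain)                               # sustain: hold while key is down
    start = env[-1] if env else 0.0
    env.extend(start * (1.0 - i / r) for i in range(r))       # release: fall toward 0
    return env


def play_note(freq, seconds_held, sr=SAMPLE_RATE):
    """Oscillator -> amplifier, with an LFO on pitch and an envelope on the amplifier."""
    env = adsr(attack=0.01, decay=0.2, sustain=0.6, release=0.3, held=seconds_held, sr=sr)
    vibrato = lfo(rate_hz=5.0, depth=0.01, n_samples=len(env), sr=sr)  # +/-1% pitch wobble
    phase = 0.0
    out = []
    for e, v in zip(env, vibrato):
        phase += freq * (1.0 + v) / sr   # LFO bends the oscillator's pitch
        osc = 2.0 * (phase % 1.0) - 1.0  # naive sawtooth oscillator
        out.append(osc * e)              # envelope sets the amplifier's gain
    return out


note = play_note(220.0, seconds_held=0.5)
```

Shortening the attack and setting the sustain to 0 would turn this into a percussive, plucky shape; a long attack and a high sustain give a slow, pad-like swell.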

Perhaps a video would have been the best way to portray everything I’d like to about these basics of synthesis. I have personally found the learning process fascinating. I have loved music all my life and grew up around it in studios, live venues, and at home. Being able to visualize and put names to all the things I’ve always heard, and perhaps never fully understood, is a very rewarding experience. I hope this gives you a little insight into why you hear things the way you do!